How do search engines work

In a world before search engines, we relied on directories and encyclopedias to find information, and it took a considerable amount of time to go through all the listed items. With search engines, the same search can be done in a fraction of a second.

A search engine is a software application that searches the Internet or the World Wide Web for a specified item and returns the best possible results. Its user interface has a text box in which the user types the terms to search for. The results are displayed as an ordered list of links, articles, images, videos, scholarly articles and so on; this page is known as the Search Engine Result Page, or SERP. Search engines use efficient algorithms to perform searches, and the process involves three main tasks:

- Web crawling (Observation)

- Indexing (Organisation)

- Searching and ranking (Categorisation)

1. Web crawling

A search engine has to deliver the most relevant results for users' search queries. To do so, it first has to discover the information that exists across the World Wide Web. This observation is carried out by automated programs called spiders or crawlers, which move from one web page to another by following links and gather data about the content they find. The content can be anything, such as text, images or videos. A well-behaved crawler first checks the site's robots.txt file, a standardised file in which the website tells search robots which pages they may and may not crawl. Many factors decide how much time a crawler spends on a page. The crawler reads the page and sends information about it to be indexed. A website can also invite crawlers to visit so that its pages get a place in the index.
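The link-following process described above can be sketched as a breadth-first traversal. The following Python is a minimal illustration only: the in-memory `WEB` dictionary and its URLs are hypothetical stand-ins for real pages, and a real crawler would also download pages over HTTP, respect robots.txt and spread its requests over time.

```python
from collections import deque

# A hypothetical, in-memory "web": each URL maps to the links found on that page.
# A real crawler would download each page and parse its links instead.
WEB = {
    "https://a.example/": ["https://a.example/about", "https://b.example/"],
    "https://a.example/about": ["https://a.example/"],
    "https://b.example/": ["https://c.example/", "https://a.example/about"],
    "https://c.example/": [],
}

def crawl(seeds, max_pages=100):
    """Breadth-first crawl: visit each page once and queue the links it contains."""
    frontier = deque(seeds)   # URLs waiting to be visited
    visited = []              # URLs in the order they were crawled (sent for indexing)
    seen = set(seeds)         # URLs already queued, so no page is visited twice
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in WEB.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

print(crawl(["https://a.example/"]))
```

Calling `crawl(["https://a.example/"], max_pages=2)` stops after two pages, a crude stand-in for the factors that limit how long a crawler spends on a site.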

If a website has pages that should not be crawled, it can block crawlers from accessing them. This is what the robots.txt file is for. The file is placed in the root folder of the website (for example, https://example.com/robots.txt) and tells crawlers which parts of the site should not be crawled. Some crawlers also honour a non-standard Crawl-delay directive that limits how fast they request pages from the site. If a crawler does not find a robots.txt file, it proceeds to crawl the site; otherwise, it follows the directives in the file.

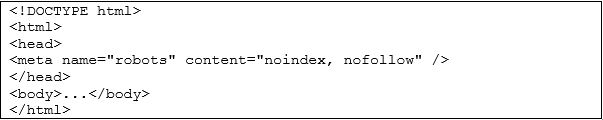
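A well-behaved crawler might apply robots.txt rules as in this minimal Python sketch, which uses the standard library's `urllib.robotparser` module. The robots.txt contents, the crawler name `MyBot` and the example.com URLs are hypothetical; parsing the text directly avoids a network request.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every crawler is asked to stay out of /private/
# and (via the non-standard Crawl-delay directive) to wait 10 s between requests.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly instead of fetching it

for url in ("https://example.com/index.html",
            "https://example.com/private/report.html"):
    decision = "crawl" if parser.can_fetch("MyBot", url) else "skip"
    print(url, "->", decision)

print("Crawl delay:", parser.crawl_delay("MyBot"))
```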

If a web page has confidential content, a robots.txt file is not enough to stop access and indexing. robots.txt is only a request that well-behaved crawlers honour, and malicious users can still write bots that ignore it. To protect the content, the website should implement authentication and authorisation policies, and can also use the NoIndex technique. The NoIndex technique places a robots meta tag with the value noindex in the head section of the web page, which tells search engines that the page must not be indexed. Robots meta tags such as NoIndex are discussed in more detail below.

2. Indexing

The data found by the crawlers is analysed for quality. If it is up-to-date, valuable, relevant, competitive, original and authentic, it gets a place in the index. The index is an enormous database in which the search engine organises and stores the information gathered by the crawlers, linking keywords and other signals from each web page to that page's URL. This process is called indexing. Because the index is organised for lookup, the search engine can respond quickly to a user's query, much like the index at the back of a book. Each significant word on a web page gets an entry in the index. According to Google, its search index contains hundreds of billions of web pages.
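As a minimal sketch of the book-index idea, the following Python maps each word to the set of pages that contain it. The page URLs and texts are hypothetical, and a real index normalises words further and stores much more information per entry.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> page text.
pages = {
    "https://example.com/a": "Search engines crawl the web",
    "https://example.com/b": "Crawlers follow links between pages",
    "https://example.com/c": "An index maps words to pages",
}

def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index

index = build_index(pages)
# Answering a one-word query is a single dictionary lookup, not a scan of every page.
print(sorted(index["pages"]))
```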

3. Ranking

Once the search engine has built an index of the keywords found on web pages, it can quickly present results related to the keywords the user types. The results are ordered from most relevant to least relevant. Search engines also offer advanced search options, such as filtering and sorting, to refine the results. For example, the user can filter results by date, year or location to narrow them down. Users can also combine search terms with Boolean operators such as AND, OR and NOT.
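Boolean operators map naturally onto set operations over the lists of documents stored for each word. In this hypothetical Python sketch, the words and document IDs are made up for illustration: AND is an intersection, OR a union and NOT a difference.

```python
# Hypothetical postings: each word maps to the set of document IDs containing it.
postings = {
    "doctor": {1, 2, 4, 5},
    "london": {2, 3, 5},
    "dentist": {3, 6},
    "children": {4, 5},
}
all_docs = {1, 2, 3, 4, 5, 6}

def docs(word):
    """Documents containing word; an unknown word matches nothing."""
    return postings.get(word, set())

print(sorted(docs("doctor") & docs("london")))     # doctor AND london -> [2, 5]
print(sorted(docs("doctor") | docs("dentist")))    # doctor OR dentist -> [1, 2, 3, 4, 5, 6]
print(sorted(docs("doctor") - docs("children")))   # doctor NOT children -> [1, 2]
print(sorted(all_docs - docs("children")))         # NOT children -> [1, 2, 3, 6]
```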

It is important to know that different search engines use different algorithms for ranking. Therefore, the search results might differ. A web page that is ranked highest in one search engine result page might not be the highest in another search engine result page.

Some important points considered for ranking include:

- Location: Results can depend on where the user is. For example, a user searching for a doctor usually wants one near their location.

- Language: If the user's language can be detected, the search engine returns results in the user's preferred language.

- Search History: The same search engine may return different results to different users for the same query, because each user's search history affects the results.

Indexing in Detail

The search engine index might include the following information about a website:

- Keyword: What are the topics discussed on the web page?

- Content: What does the page say about these topics?

- Date: When was the page last modified?

- Engagement: How long do users engage with the web page?

This section discusses the process of indexing and related techniques in detail.

1. Index design factors

Index design factors are the architectural decisions a search engine must make. To carry out indexing, a search engine has to consider the following design factors:

1.1. Merge Factors

- Determining how data enters the index: whether an item is new content or an update to content that is already indexed.

- Determining how the data should be saved in the Index.

1.2. Storage techniques

- Determining the technique used to store data. For example, compressing large files to a smaller size, or filtering the information to narrow down what is stored.

1.3. Index Size

- Determining the size of computer storage required for the indexing database

1.4. Lookup Speed

- Determining the speed of searching for a word in the Inverted Index and presenting the result.

- Determining the speed of updating data in the index.

1.5. Maintenance

- Determining how the index is maintained over time, including how much data it holds and how large it grows.

- Determining the frequency of updates to the Index

1.6. Fault Tolerance

- Determining how reliable the Index of a search engine is.

2. Index data structures

Search engines use several data structures to build the index and organise web pages. Common ones are discussed below:

2.1. Suffix Tree/Suffix Array

- A Suffix Tree stores all the suffixes of the indexed text in a tree structure and supports lookup in time linear in the length of the search pattern. It supports hashing, an important technique for search engine indexing. A drawback is that the tree can need more space than the text itself. An alternative is the Suffix Array, a sorted array of the starting positions of all the suffixes, which is considered to need less storage space and memory.
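The following Python sketch builds a suffix array for a single string and uses binary search to find every occurrence of a pattern. It is illustrative only: the construction simply sorts all suffixes, which is far slower than the algorithms used in practice, and the example string is arbitrary.

```python
def build_suffix_array(text):
    """Start positions of all suffixes of text, in sorted suffix order (naive sort)."""
    return sorted(range(len(text)), key=lambda i: text[i:])

def find_occurrences(text, sa, pattern):
    """Binary-search the suffix array for the suffixes that start with pattern."""
    m = len(pattern)
    lo, hi = 0, len(sa)
    while lo < hi:  # lower bound: first suffix whose prefix is >= pattern
        mid = (lo + hi) // 2
        if text[sa[mid]:sa[mid] + m] < pattern:
            lo = mid + 1
        else:
            hi = mid
    start, hi = lo, len(sa)
    while lo < hi:  # upper bound: first suffix whose prefix is > pattern
        mid = (lo + hi) // 2
        if text[sa[mid]:sa[mid] + m] <= pattern:
            lo = mid + 1
        else:
            hi = mid
    return sorted(sa[start:lo])

text = "banana"
sa = build_suffix_array(text)
print(sa)                                 # [5, 3, 1, 0, 4, 2]
print(find_occurrences(text, sa, "ana"))  # [1, 3]
```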

2.2. Inverted Index/ Reverse Index

- The Inverted Index stores, for each word, the list of documents in which it occurs (and optionally the position of each occurrence), typically in the form of a hash table or binary tree.

2.3. Citation Index

- A Citation Index stores the hyperlinks and references between web pages so that they can be used for citation analysis.
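A citation index can be sketched by inverting a link graph so that each page records which pages link to it. In this Python example the page names and links are hypothetical, and counting incoming links stands in for citation analysis; real ranking signals are considerably more involved.

```python
from collections import defaultdict

# Hypothetical link graph: each page maps to the pages it links to.
outlinks = {
    "a.html": ["b.html", "c.html"],
    "b.html": ["c.html"],
    "c.html": ["a.html"],
    "d.html": ["c.html"],
}

def build_citation_index(outlinks):
    """Invert the link graph: for each page, record which pages cite (link to) it."""
    cited_by = defaultdict(set)
    for source, targets in outlinks.items():
        for target in targets:
            cited_by[target].add(source)
    return cited_by

cited_by = build_citation_index(outlinks)
# Simple citation analysis: the number of distinct pages linking to each page.
counts = {page: len(sources) for page, sources in cited_by.items()}
print(max(counts, key=counts.get), counts)
```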

2.4. N-gram Index

- An N-gram Index stores sequences of n consecutive items of data (for example, characters or words) to support other kinds of retrieval and text mining.
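As one possible illustration, this Python sketch builds a character trigram index over a small, hypothetical vocabulary and uses it to suggest words that share many trigrams with a misspelled query. Word-level n-grams are another common choice; the `$` padding character and the `min_shared` threshold are arbitrary assumptions.

```python
from collections import defaultdict

def ngrams(word, n=3):
    """Character n-grams of word, padded with '$' so its start and end form n-grams too."""
    padded = f"${word}$"
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}

# Hypothetical vocabulary of indexed words.
vocabulary = ["search", "research", "engine", "seared"]

# The n-gram index maps each trigram to the words that contain it.
ngram_index = defaultdict(set)
for word in vocabulary:
    for gram in ngrams(word):
        ngram_index[gram].add(word)

def suggest(query, min_shared=3):
    """Words sharing at least min_shared trigrams with query, in alphabetical order."""
    shared = defaultdict(int)
    for gram in ngrams(query):
        for word in ngram_index.get(gram, ()):
            shared[word] += 1
    return sorted(word for word, count in shared.items() if count >= min_shared)

print(suggest("serch"))                # ['search']
print(suggest("serch", min_shared=2))  # ['research', 'search']
```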

2.5. Document-term matrix

- A Document-term Matrix records how often each word occurs in each document, with one row per document and one column per term. It is a two-dimensional sparse matrix: a matrix in which most elements are zero and only a few are non-zero. Because only the non-zero elements need to be stored, a sparse matrix takes much less space than storing every element.

Together with the index architecture, these data structures let the search engine build an index that quickly returns the most relevant search results.

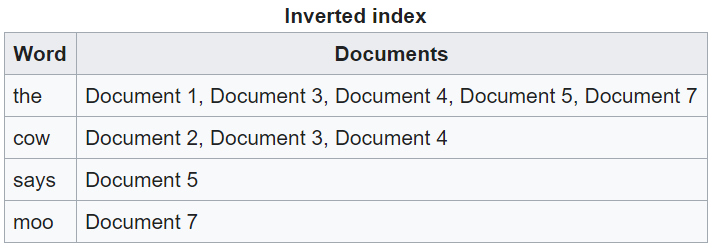
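The sparse document-term matrix can be sketched in Python by storing, for each document (row), only the terms (columns) that occur in it. The documents are hypothetical, and the storage comparison simply counts matrix cells, not bytes.

```python
from collections import Counter

# Hypothetical documents.
docs = [
    "the cow says moo",
    "the cat sat",
    "moo moo said the cow",
]

# Sparse representation: each row stores only the terms that occur, with their counts.
matrix = [Counter(doc.split()) for doc in docs]
vocabulary = sorted(set().union(*matrix))

def cell(row, term):
    """Value at (document row, term column); terms not stored are implicitly zero."""
    return matrix[row].get(term, 0)

stored = sum(len(row) for row in matrix)
dense = len(docs) * len(vocabulary)
print(f"{stored} non-zero cells stored instead of {dense}")
print(cell(2, "moo"), cell(1, "moo"))
```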

3. Inverted indices

Storing an entry for every search keyword takes a lot of storage space and other resources, so search engines organise their database as an Inverted Index. Before indexing, the text is broken down into tokens and normalised so that only the meaningful words are kept; this process is called tokenisation. For each word, the inverted index stores the list of documents in which that word occurs. When a user queries the word, the search engine can go directly to those documents instead of scanning every page, which needs fewer resources and makes search faster. The following table shows an example of an inverted index:

Table Source: https://en.wikipedia.org/wiki/Search_engine_indexing

As the table shows, this index lists the documents in which each word occurs. However, it cannot rank the documents from most to least relevant, because no other detail is stored. Such an index is called a Boolean index, because it only records yes or no for the presence of a word. If the frequency of each word in each document is added, the search engine can list documents in order of relevance. If the positions of the words are also stored, it can search for phrases and compound terms.

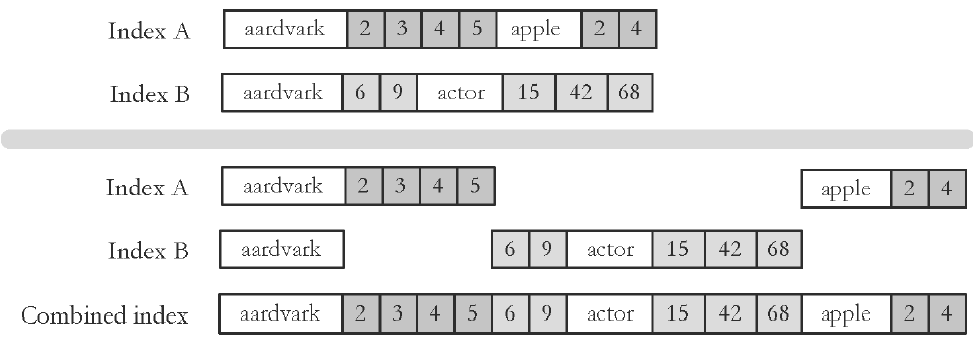
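The difference between a Boolean index and one that records frequency and position can be sketched in Python. In this minimal example the documents are hypothetical, ranking uses raw term frequency only, and the phrase search handles two-word phrases; real search engines combine many more signals.

```python
from collections import defaultdict

# Hypothetical documents, keyed by document ID.
docs = {
    1: "the cow says moo",
    2: "the cow and the other cow",
    3: "moo said the cat",
}

# Positional inverted index: word -> {doc_id: [positions of the word in that doc]}.
# The length of each position list is the word's frequency in that document.
index = defaultdict(lambda: defaultdict(list))
for doc_id, text in docs.items():
    for pos, word in enumerate(text.split()):
        index[word][doc_id].append(pos)

def rank(word):
    """Documents containing word, highest frequency first."""
    postings = index.get(word, {})
    return sorted(postings, key=lambda d: len(postings[d]), reverse=True)

def phrase(first, second):
    """Documents in which second immediately follows first."""
    hits = []
    for doc_id, positions in index.get(first, {}).items():
        following = set(index.get(second, {}).get(doc_id, []))
        if any(p + 1 in following for p in positions):
            hits.append(doc_id)
    return sorted(hits)

print(rank("cow"))           # [2, 1]
print(phrase("the", "cow"))  # [1, 2]
```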

4. Index merging

Index merging improves the construction of the inverted index when there is not enough memory to build it all at once. Partial indexes are built in memory and written to disk as memory fills up. Once all documents have been processed, the partial indexes are merged into a single index sorted alphabetically by word, with the document lists for each word combined. The following figure shows an example of index merging.

Figure Source: http://www.cs.cornell.edu/courses/cs4300/2013fa/lectures/index-construction-2-4pp.pdf

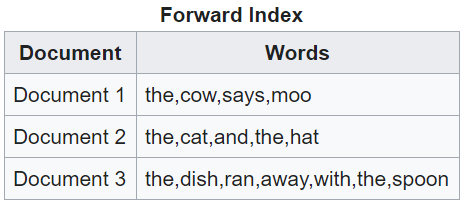
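The merge step can be sketched in Python with `heapq.merge`, which combines already-sorted inputs lazily, in the spirit of reading partial indexes back from disk a piece at a time. The two runs below are hypothetical (word, document ID) pairs held in memory for simplicity.

```python
import heapq
from itertools import groupby

# Two hypothetical partial indexes ("runs"), each sorted by word and then document ID.
run_1 = [("cow", 1), ("moo", 1), ("the", 1), ("the", 2)]
run_2 = [("cat", 3), ("cow", 4), ("the", 3)]

def merge_runs(*runs):
    """Merge sorted runs into one index: word -> sorted list of document IDs."""
    merged = heapq.merge(*runs)  # yields pairs in global sorted order, one at a time
    # A real system would stream this output back to disk rather than build a dict.
    return {word: [doc for _, doc in group]
            for word, group in groupby(merged, key=lambda pair: pair[0])}

print(merge_runs(run_1, run_2))
```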

5. The forward index

The forward index, as its name suggests, is the reverse of the inverted index: for each document, it stores the list of words that occur in it. A forward index can be built quickly as each document is parsed. This is shown in the following example:

Table Source: https://en.wikipedia.org/wiki/Search_engine_indexing

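The forward index is later sorted by word to transform it into the inverted index, which is then compressed. Storing the words per document first allows documents to be parsed independently of index updates, which partly avoids the bottleneck of updating the inverted index directly.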
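The transformation from forward index to inverted index amounts to sorting (word, document) pairs by word. This Python sketch uses a small, hypothetical forward index and omits the compression step.

```python
from collections import defaultdict

# Hypothetical forward index, built document by document during parsing.
forward_index = {
    1: ["the", "cow", "says", "moo"],
    2: ["the", "cat", "and", "the", "hat"],
    3: ["the", "dish", "ran", "away", "with", "the", "spoon"],
}

def invert(forward_index):
    """Sort unique (word, doc_id) pairs by word to build an inverted index."""
    pairs = sorted({(word, doc_id)
                    for doc_id, words in forward_index.items()
                    for word in words})
    inverted = defaultdict(list)
    for word, doc_id in pairs:
        inverted[word].append(doc_id)  # doc IDs arrive in ascending order
    return dict(inverted)

inverted = invert(forward_index)
print(inverted["the"])  # [1, 2, 3]
```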

6. Compression

The inverted index is the central data structure in search engine indexing. It must be accessed quickly and efficiently to respond to a user's search query. Because it stores an enormous amount of data, it needs to be compressed. Compression not only minimises the storage space required but can also speed up retrieval, because less data has to be read. So, compression techniques must be chosen to save both time and storage space. Many compression techniques have been described in the literature to improve this process and keep more of the index in memory. The choice also depends on how much a search engine provider is willing to spend on algorithms and computing resources.
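One widely taught technique, shown here as an illustration rather than what any particular search engine uses, is to store the gaps between sorted document IDs and encode each gap with variable-byte encoding, so that small numbers take fewer bytes. The document IDs in this Python sketch are hypothetical.

```python
def vbyte_encode(numbers):
    """Variable-byte encoding: 7 data bits per byte, high bit set on each number's last byte."""
    out = bytearray()
    for n in numbers:
        chunk = [n & 0x7F]
        n >>= 7
        while n:
            chunk.insert(0, n & 0x7F)
            n >>= 7
        chunk[-1] |= 0x80  # marks the final byte of this number
        out.extend(chunk)
    return bytes(out)

def vbyte_decode(data):
    numbers, n = [], 0
    for byte in data:
        n = (n << 7) | (byte & 0x7F)
        if byte & 0x80:  # last byte of the current number
            numbers.append(n)
            n = 0
    return numbers

def compress_postings(doc_ids):
    """Store the first ID, then gaps between sorted IDs; small gaps need fewer bytes."""
    if not doc_ids:
        return b""
    gaps = [doc_ids[0]] + [b - a for a, b in zip(doc_ids, doc_ids[1:])]
    return vbyte_encode(gaps)

def decompress_postings(data):
    doc_ids, total = [], 0
    for gap in vbyte_decode(data):
        total += gap
        doc_ids.append(total)
    return doc_ids

postings = [824, 829, 215406]
encoded = compress_postings(postings)
print(len(encoded), "bytes")  # 6 bytes
```

Stored as fixed 4-byte integers, the three IDs would take 12 bytes; the gaps 824, 5 and 214577 fit in 2, 1 and 3 bytes respectively.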

Document parsing

When a web page is crawled by the search engine robot, it is parsed so that its information can be saved in the search index. A web page contains HTML tags that specify the layout of the document, and the document is parsed according to the syntax of these tags. This is called syntactic analysis. The text is split into sentences and words; white space, punctuation and common words are removed, while the important words are kept. A Document Object Model (DOM) is created that describes both the structure and the content of the web page, and this model is used to construct the index.
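A simplified version of this parsing step is sketched below in Python. It uses the standard library's event-based `html.parser` rather than building a full DOM, skips script and style contents, and removes punctuation and a tiny, hypothetical stop-word list.

```python
import re
from html.parser import HTMLParser

STOP_WORDS = {"a", "an", "and", "the", "of", "to", "is", "in"}  # tiny illustrative list

class TextExtractor(HTMLParser):
    """Collect text content from HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)

def index_terms(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.parts).lower()
    words = re.findall(r"[a-z0-9]+", text)            # drops white space and punctuation
    return [w for w in words if w not in STOP_WORDS]  # drops common words

page = """<html><head><title>Search Engines</title>
<script>var tracking = 1;</script></head>
<body><h1>How indexing works</h1><p>The index is built from the text of a page.</p></body></html>"""
print(index_terms(page))
```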

1. Tokenisation

A computer has to be programmed to parse a document and decide whether each word should be saved. This is done by a tokeniser. Tokenisation is the process of breaking text into tokens, such as words, and reducing them to a normalised form. A tokeniser can also recognise email addresses, hyperlinks and numbers. Each token has related information stored with it, such as whether it is in upper or lower case, which part of speech it belongs to and its position in the sentence. Tokenisation helps the search engine present search results faster and reduces the computer resources required, such as data storage and processing power.
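The following Python sketch shows a regular-expression tokeniser that recognises URLs, email addresses, numbers and words, and stores a normalised form, the token's position and whether it was capitalised. The patterns are simplified assumptions and will not match every valid URL or address; part-of-speech tagging is omitted.

```python
import re
from dataclasses import dataclass

# More specific patterns come first, so a URL is not split into separate words.
TOKEN_PATTERN = re.compile(
    r"(?P<url>https?://\S+)"
    r"|(?P<email>[\w.+-]+@[\w-]+(?:\.[\w-]+)+)"
    r"|(?P<number>\d+(?:\.\d+)?)"
    r"|(?P<word>[^\W\d_]+(?:'[^\W\d_]+)?)"
)

@dataclass
class Token:
    text: str          # normalised (lowercased) form stored in the index
    kind: str          # "url", "email", "number" or "word"
    position: int      # position of the token in the text
    capitalised: bool  # whether the original started with an upper-case letter

def tokenise(text):
    tokens = []
    for position, match in enumerate(TOKEN_PATTERN.finditer(text)):
        raw = match.group()
        tokens.append(Token(raw.lower(), match.lastgroup, position, raw[:1].isupper()))
    return tokens

for token in tokenise("Email Alice at alice@example.com or visit https://example.com before 2024."):
    print(token)
```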

2. Language recognition

The search engine needs to recognise the language of each document it indexes, as well as the language of the user's search query. Language recognition is one of the first steps in indexing: when parsing a document, the grammatical details of each token must be saved, and this cannot be done without knowing the document's language.
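A very rough way to guess a document's language is to count how many of its words appear in lists of common words for each candidate language. This Python sketch uses tiny, hypothetical word lists purely for illustration; production systems typically rely on statistical models trained on large amounts of text.

```python
# Tiny, hypothetical common-word profiles for three languages.
PROFILES = {
    "english": {"the", "and", "is", "of", "to", "in", "that", "it"},
    "french": {"le", "la", "et", "est", "de", "les", "des", "un"},
    "german": {"der", "die", "und", "ist", "das", "nicht", "ein", "zu"},
}

def guess_language(text):
    """Pick the language whose common words appear most often in text."""
    words = text.lower().split()
    scores = {lang: sum(w in common for w in words) for lang, common in PROFILES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"

print(guess_language("The cat is on the mat"))     # english
print(guess_language("Le chat est sur la table"))  # french
print(guess_language("Die Katze ist nicht hier"))  # german
```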

3. Format analysis

Before a document is processed by a tokeniser, it is important to know the format of the document. The source code of a web page includes tags that specify the layout as well as the actual content of the page. It is easily parsed if the search engine supports only a single markup language. This is not the case in reality. There are many markup languages available today in which a web page can be written. This implies that the search engine must know the difference between markup tags and the content found on a page. So, the first task in parsing a document would be investigating the format of the web page. HTML, XML, PDF, DOC, PPT etc. are some of the commonly used formats. Sometimes, the owners of these formats also provide the format parser to deal with format analysis.
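One simple first step in format analysis is to inspect a file's leading bytes, its so-called magic number. This Python sketch checks a few well-known signatures (PDF files start with `%PDF-`, legacy Office files use the OLE2 compound file signature, and DOCX/PPTX files are ZIP archives); the category names it returns are illustrative, and a real system would also consider HTTP headers and file extensions.

```python
def detect_format(data: bytes) -> str:
    """Guess a document's format from its leading bytes (its "magic number")."""
    # Binary formats: the signature must appear at the very start of the file.
    if data.startswith(b"%PDF-"):
        return "pdf"
    if data.startswith(b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1"):
        return "ole2"  # legacy Office container, e.g. .doc or .ppt
    if data.startswith(b"PK\x03\x04"):
        return "zip"   # ZIP container, e.g. .docx or .pptx
    # Text formats: ignore leading white space and letter case.
    head = data[:512].lstrip().lower()
    if head.startswith(b"<?xml"):
        return "xml"
    if head.startswith((b"<!doctype html", b"<html")):
        return "html"
    return "unknown"

for sample in (b"%PDF-1.7 ...", b"  <!DOCTYPE html><html>...</html>", b"plain text"):
    print(detect_format(sample))
```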

4. Meta tag indexing

Markup languages like HTML allow the developer to include data about the document itself (metadata) in its head section. For example, the title, author, important keywords and language of the document can be specified. These tags help the process of document indexing.

Optimisation of Indexing for a Website

If we have launched a new website, for example an online business, we want to reach the market quickly, so we want search engines to index the site quickly. Similarly, if we have updated the content of a web page and want search engines to notice the change quickly, we can apply optimisation techniques. These are especially helpful for content that needs a quick time-to-market.

To check which pages of a website a search engine has indexed, type “site:yourdomain.com” into the search engine's search box.

In this section, we discuss a few techniques to make Indexing faster for our website. These techniques include:

1. XML Sitemaps

An XML sitemap is an important way to invite and guide crawlers to a website. It lists the web pages that make up the site and can include the date each page was last modified.
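A sitemap following the sitemaps.org protocol can be generated with Python's `xml.etree.ElementTree`, as in this sketch. The page URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for (url, last_modified) pairs; dates use W3C format, e.g. 2024-01-15."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/about", "2023-11-02"),
]))
```

The resulting file is usually published at the site root and can also be referenced from robots.txt with a `Sitemap:` line.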

2. Robots Meta Directives / Robots Meta Tags

Meta tags describe data about a web page, and the robots meta tag in particular gives crawlers page-level instructions, such as whether to index the page and whether to follow its links. Meta tags are part of the page's code and don't appear on the rendered page. The following are some commonly used robots meta directives:

- 2.1. Index/NoIndex: Tells the search engine whether to index a page. By default, search engines index the pages they crawl, so in practice only NoIndex is used, to block indexing.

- 2.2. Follow/NoFollow: Tells the search engine whether to follow the links on the page. By default, search engines follow all links. This directive is often used together with NoIndex.

- 2.3. NoArchive: Tells the search engine not to show a cached copy of the page in its results, so users always reach the current page. This is especially helpful for e-commerce websites, where content such as prices changes continually.

Note that robots meta tags cannot restrict crawling; they only control indexing and link-following. Logically, a search engine cannot read the instructions in a page's meta tags until it has crawled that page. For the same reason, if a page is blocked in robots.txt, the crawler never fetches it and never sees its NoIndex tag.

Example: the following HTML tag, placed in the `<head>` section of a page, tells search engines not to index it: `<meta name="robots" content="noindex">`
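The following Python sketch shows how a crawler might read robots meta directives and turn them into indexing decisions. It uses the standard library's `html.parser`; treating `none` as equivalent to `noindex, nofollow` follows Google's documented behaviour, and the dictionary returned by the hypothetical `robots_policy` function is an illustrative format.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())

def robots_policy(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    d = parser.directives
    # Indexing, following links and caching are allowed unless explicitly turned off.
    return {
        "index": "noindex" not in d and "none" not in d,
        "follow": "nofollow" not in d and "none" not in d,
        "archive": "noarchive" not in d,
    }

print(robots_policy('<head><meta name="robots" content="noindex, nofollow"></head>'))
print(robots_policy("<head><title>No robots tag</title></head>"))
```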

3. Fetch as Google

This tool was available in Google Search Console. It fetched a submitted URL and let the site owner request that Google index it. Google has since replaced it with the URL Inspection tool, which offers a similar option to request indexing.

4. Submit URL

Most major search engines let website owners submit a URL or a sitemap and request that it be crawled.

5. Hosting Content on a Search Engine Platform

Websites need web hosts that store their files and make them accessible to the public. If we choose a web hosting service run by the same company as the search engine, it might give our site preference and increase the chances of it being indexed quickly. An example of such hosting is Google Firebase, Google's app development platform, which includes web hosting. Sites hosted there might be reached by the Google search engine without extra guidance for crawling, which may increase their chances of being indexed by Google.

Conclusion

Search engine indexing is a process in which documents are parsed into tokens that are saved in an enormous database called the index. The index contains the words as well as the list of documents in which each word is found, which lets the search engine respond efficiently to user search queries.

